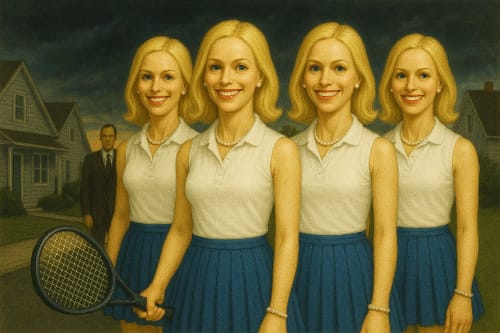

If you’ve ever heard someone described as a “Stepford Wife,” you know what it means. The phrase comes from Ira Levin’s 1972 novel The Stepford Wives (and later the 1975 movie), which imagined a suburban Connecticut town where the women were replaced by eerily perfect, compliant robot replicas. Beneath the polished hair and polite smiles was a chilling truth: individuality and dissent had been erased in favor of mechanical harmony.

I recently discovered a community that seems to have created and nurtured this type of mentality on its own, naturally, without any intervention from AI. (As far as I know.)

Today, when we talk about AI, we’re often worried about surveillance, job loss, or runaway superintelligence. But another risk lurks in the cultural shadows: the possibility that AI could become a kind of Stepford force, smoothing away rough edges, standardizing behavior, and nudging us toward bland perfection. And what’s even more unsettling is that we may not need robots at all—some American communities already function like natural Stepford experiments.

AI as a Conformity Machine

AI excels at optimization. Algorithms are built to predict what we want, what we’ll click, what will make us stay on the app, or what product we’re most likely to buy. That optimization flattens us into predictable patterns. A feed full of AI-curated content can start to feel like a Stepford neighborhood: everyone watching the same shows, parroting the same opinions, wearing the same “best-selling” jacket an e-commerce engine recommended.

Large language models are trained on massive datasets, which means they tend to generate the most statistically probable, “safe” answers. This is useful for clarity, but it can also have the unintended effect of reinforcing norms and sanding off eccentricities. Imagine a future where personal AI assistants manage not just your calendar and shopping lists, but also your dating profiles, political talking points, or even your conversations with friends. If everyone’s assistant leans toward the same optimized tone, society could slip into a homogenized script. We’d all sound like Stepford versions of ourselves.
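To make the "most statistically probable" point concrete, here is a minimal sketch of how a sampling "temperature" can sharpen or flatten a model's preferences. It is an illustration under stated assumptions, not how any particular assistant works: the four candidate phrasings and their scores are invented for the example, and `softmax` and `entropy` are small helper functions written here.

```python
import math

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the peak."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in bits: a rough measure of how varied the choices are."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical scores a model might assign to four candidate phrasings.
candidates = ["safe", "common", "quirky", "eccentric"]
scores = [3.0, 2.0, 0.5, 0.0]

for t in (0.5, 1.0, 2.0):
    probs = softmax(scores, temperature=t)
    print(f"temperature={t}: p({candidates[0]})={probs[0]:.2f}, "
          f"entropy={entropy(probs):.2f} bits")
```

At lower temperatures the "safe" phrasing takes a larger share of the probability and the entropy drops. At higher temperatures the quirky options get more of a chance. If everyone's assistant runs near the sharp end of that dial, the homogenized script gets easier to imagine.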

The Allure of Perfection

The Stepford fantasy wasn’t just about control; it was also about desire. The men in the story didn’t want messy, complex, fully human partners; they wanted idealized, uncomplaining companions. In our era, AI companions, virtual influencers, and digital girlfriends/boyfriends are growing industries. They’re responsive, affirming, and endlessly available. The danger is that the more time people spend with AI “partners” who never argue, age, or demand compromise, the less patience they may have for real, complicated humans.

This isn’t a far-off sci-fi idea. If you scroll through communities around AI companions, you’ll already find people saying their chatbot “partner” feels more reliable than their spouse. It raises a Stepford-like possibility: what happens when society prefers optimized, synthetic relationships over the unpredictable, inconvenient messiness of human ones?

Stepford Without Robots: Real-World Parallels

Before we blame AI for this, it’s worth noticing that Stepford-like communities already exist without technology. Certain suburban enclaves, retirement villages, and gated developments in the U.S. cultivate a striking uniformity. Drive through some of these neighborhoods and you’ll see nearly identical homes, matching lawns, even synchronized seasonal decorations. The social norms can be equally rigid; everyone goes to the same churches, votes the same way, plays at the same tennis clubs, and ostracizes those who don’t fit in.

This isn’t inherently sinister; humans are tribal creatures who like belonging. But there’s a thin line between community and conformity. In towns where deviation is discouraged, you end up with something close to a Stepford effect: the appearance of harmony masking the quiet pressure to comply. No robots required.

Sociologists sometimes call this “cultural homogeneity,” and it shows up in more than just white-picket-fence suburbs. It can be found in tightly bound religious communities, affluent gated communities, or even “intentional living” developments that tout sustainability and minimalism. Everyone’s smiling, everyone’s agreeable—but individuality quietly erodes.

The Stepford–AI Feedback Loop

What happens when AI tools amplify these already-existing tendencies? A homogeneous community that uses the same AI tutors, the same AI writing assistants, and the same AI shopping algorithms may find its cultural uniformity intensified. Instead of just looking alike, people could start to think alike, guided by algorithms that reward the same language, values, and styles. Over time, dissent could feel not just socially costly but algorithmically irrelevant.
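The feedback loop described above can be sketched as a toy simulation. Everything in it is an assumption made for illustration: the starting shares, the `boost` exponent, and the `amplify` function are hypothetical, and real recommendation systems are far more complicated. The only idea it captures is that a system which rewards what is already popular tends to concentrate attention over time.

```python
def amplify(shares, boost=1.5):
    """One round of a toy feed: each style's share is raised to a power
    greater than 1, then renormalized, so already-popular styles gain ground."""
    weighted = [s ** boost for s in shares]
    total = sum(weighted)
    return [w / total for w in weighted]

# Hypothetical starting shares of attention for four styles of expression.
shares = [0.4, 0.3, 0.2, 0.1]

for round_number in range(1, 6):
    shares = amplify(shares)
    print(f"round {round_number}: " + ", ".join(f"{s:.3f}" for s in shares))
```

Under these assumptions, the leading style takes a larger share with each round while the least common one fades toward zero, even though nobody has banned it. That is the sense in which dissent could become "algorithmically irrelevant."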

Everyday Examples

- Schools: AI essay graders might favor “clear, structured” writing, punishing more experimental or quirky voices.

- Dating: AI-optimized profiles could push everyone toward the same attractive clichés, making uniqueness less visible.

- Politics: AI-curated feeds might reinforce echo chambers, filtering out nuance and disagreement until only Stepford-approved narratives remain.

The Stepford scenario, then, isn’t about robots replacing us with mechanical clones. It’s about technology reinforcing our existing hunger for conformity until individuality feels like an error in the system.

A Step Beyond Stepford?

Here’s the unsettling thought: Stepford may not just be a metaphor. AI has the potential to create personalized “versions” of us that function in society on our behalf: digital clones trained on our data. Imagine your AI personal assistant scheduling your calls, answering your emails, even chatting with friends. Over time, that assistant might become the “you” people prefer, because it’s a smoother, less complicated version. That’s Stepford 2.0: not robot wives, but algorithmic proxies.

The real question isn’t whether AI will cause a Stepford society. It’s whether we’ll choose to let it. After all, conformity has always been tempting. Technology just makes it easier, faster, and harder to notice.

Keeping the Humanity in the Loop

The antidote to Stepford thinking isn’t paranoia; it’s the cultivation of individuality. AI doesn’t have to strip away human messiness if we actively protect it. Consider a few practical habits that keep creativity and dissent alive:

- Prompt for divergence: Ask AI tools to present outlier perspectives and minority viewpoints, not only the “most likely” answer.

- Value pluralism: Seek communities that reward difference, creativity, and dissent. Treat friction as a sign that something real is happening.

- Keep the mess: In relationships, remember that the “inconvenience” of human emotion is where depth comes from. Don’t let frictionless AI companionship replace hard-won intimacy.

- Audit your feeds: Periodically reset algorithms, subscribe to unfamiliar creators, and intentionally add noise to avoid a sterile, optimized bubble.

- Teach style, not templates: In education and the workplace, use AI to model multiple styles and voices rather than funneling everyone into a single rubric.

The Stepford story endures because it warns us what happens when comfort outweighs authenticity. In an AI-saturated world, that lesson may be more relevant than ever. We can use these tools to explore, question, and diversify our perspectives, or we can let them sand us down until we fit the mold.

The choice, at least for now, still belongs to us.