by Patrix | Aug 25, 2025

Most folks picture coding as typing inscrutable symbols at 2 a.m., praying the error goes away. Vibe coding offers a friendlier path. Instead of wrestling with syntax, you describe the feel of what you want—“cozy recipe site,” “retro arcade vibe,” “calm portfolio gallery”—and an AI coding assistant drafts the code. You steer with words, not semicolons.

It feels like jazz improvisation rather than marching-band precision. You hum the tune, the system riffs, and together you find something that works.

What exactly is vibe coding?

Traditional programming demands exact instructions. Misspell “color” in CSS and the browser quietly ignores that rule. Vibe coding flips that expectation. You set intent and tone in natural language and let an AI model generate the scaffolding: HTML, CSS, JavaScript, even backend stubs. You don’t memorize every rule; you curate the experience.

Example prompts a creator might use:

- “Make me a minimalist landing page with soft earth tones, large headings, and a gentle fade‑in on scroll.”

- “Design a playful to‑do app with hand‑drawn icons and a doodle notebook feel.”

- “Build a one‑screen arcade game where a frog hops across floating pizza slices.”

If the first result isn’t quite right, you iterate conversationally: “Make it warmer,” “simplify the layout,” “use a typewriter font,” “slower animation.”

Why creatives and retirees love it

Vibe coding lowers the barrier between idea and execution. If you can describe a mood, you can prototype a site or app. That’s huge for artists, writers, gardeners, cooks, and curious retirees who have projects in mind but don’t want to wade through arcane tutorials. It turns software creation into something closer to sketching: quick, expressive, and forgiving.

A tiny anecdote: the first time I tried vibe coding a recipe card, I typed, “Give me a farmhouse kitchen vibe with wood textures and big photo slots.” The AI delivered a working layout in seconds. I nudged it—“larger headings,” “cream background,” “add a whisk icon to the search bar”—and the page snapped into place. No CSS rabbit holes, no plugin tangles.

How it works in practice

Think of a three‑step loop:

- Describe the outcome

Explain the feel, audience, and basic features: “A gallery portfolio for a mixed‑media artist, moody lighting, grid layout, lightweight animations, and an about section.”

- Let the AI draft the code

The assistant produces structured files. Many tools can also scaffold assets, placeholders, and simple interactions.

- Refine by vibe

You nudge the tone rather than micromanage hex codes: “More airy,” “less shadow,” “rounded corners,” “slower scroll.” The AI regenerates targeted parts of the code.
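Here is the describe, draft, refine loop as a small Python sketch. The assistant is a stand-in. Any function that takes the conversation so far and returns code would fit. The VibeSession class and its method names are hypothetical and do not belong to any real tool’s API. Keeping every draft also gives you a cheap way to roll back a regeneration that goes sideways.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stand-in for an AI coding assistant: it receives every
# prompt so far and returns generated code as a string.
Assistant = Callable[[list[str]], str]


@dataclass
class VibeSession:
    assistant: Assistant
    prompts: list[str] = field(default_factory=list)
    drafts: list[str] = field(default_factory=list)

    def describe(self, brief: str) -> str:
        """Steps 1 and 2: state the outcome, then let the assistant draft."""
        self.prompts = [brief]
        self.drafts = [self.assistant(self.prompts)]
        return self.drafts[-1]

    def refine(self, nudge: str) -> str:
        """Step 3: add a vibe-level nudge and regenerate."""
        self.prompts.append(nudge)
        self.drafts.append(self.assistant(self.prompts))
        return self.drafts[-1]

    def rollback(self) -> str:
        """Undo the latest refinement, but never the original draft."""
        if not self.drafts:
            raise RuntimeError("nothing drafted yet; call describe() first")
        if len(self.drafts) > 1:
            self.prompts.pop()
            self.drafts.pop()
        return self.drafts[-1]


# A fake assistant that just echoes the prompts, so the loop runs offline.
def echo_assistant(prompts: list[str]) -> str:
    return "<!-- " + " | ".join(prompts) + " -->"


session = VibeSession(echo_assistant)
print(session.describe("Gallery portfolio, moody lighting, grid layout"))
print(session.refine("More airy, less shadow"))
print(session.rollback())  # back to the first draft
```

Swap echo_assistant for a real model call and the shape of the loop stays the same.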

A 10‑minute vibe‑coded mini‑project (try this)

Goal: A single‑page “digital zine” for a weekend workshop.

Prompt 1:

“Create a one‑page site called ‘Saturday Zine Lab.’ Friendly, zine‑like typography, off‑white background, wide margins, and a collage feel. Include three sections: About, Schedule, and What to Bring. Add subtle paper texture, large headings, and a footer with contact links.”

Prompt 2 (tweak the feel):

“Make the headings chunkier, add a gentle hover wiggle on links, and use a simple grid for the Schedule with times on the left. Keep the performance light.”

Prompt 3 (final polish):

“Improve readability with 18px body text and 1.6 line height. Increase contrast slightly, and add a tiny torn‑paper divider between sections.”

Load the result and you’ve got a charming page ready for real content, assembled by describing the mood.
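Prompt 3 asks to “increase contrast slightly.” That is one vibe request you can check with a number. The WCAG accessibility guidelines define a contrast ratio between text and background that runs from 1:1 up to 21:1. At the AA level, they ask for at least 4.5:1 for normal body text. Below is a small Python checker. The paper color #FAF7F0 and the two gray text colors are hypothetical picks for the zine, not colors the AI is guaranteed to produce.

```python
def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of an sRGB color such as '#FAF7F0'."""
    h = hex_color.lstrip("#")
    channels = []
    for i in (0, 2, 4):
        c = int(h[i:i + 2], 16) / 255
        # Undo the sRGB gamma curve (threshold as written in WCAG 2.x).
        channels.append(c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4)
    r, g, b = channels
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: str, bg: str) -> float:
    """(lighter + 0.05) / (darker + 0.05), ranging from 1 to 21."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


# Hypothetical zine palette: off-white paper and two candidate text grays.
for text in ("#777777", "#333333"):
    ratio = contrast_ratio(text, "#FAF7F0")
    print(text, round(ratio, 2), "meets AA" if ratio >= 4.5 else "below AA")
```

A light gray that looks elegant on screen can quietly fall under the threshold. A quick check like this turns “increase contrast slightly” into a concrete target.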

Why vibe coding is trending now

- Better AI models: Modern coding assistants interpret fuzzy language far more reliably than earlier tools. They can bridge the gap between intention and implementation.

- A hunger for playful creation: After years of “AI for spreadsheets,” people want tools that feel like paintbrushes. Vibe coding rewards curiosity.

Upsides you’ll notice

- Accessibility: You can build without mastering syntax. A good prompt becomes your design brief.

- Speed: Prototypes move from idea to screen in minutes, which is perfect for testing concepts.

- Creative flow: You stay in the “what it should feel like” headspace, instead of context‑switching into error logs.

Trade‑offs to consider

- Hidden complexity: Generated code may be messy or heavier than necessary. Great for personal projects, not always ideal for long‑term maintenance.

- Black‑box risk: If you don’t inspect the output, you might ship inefficiencies or minor security issues.

- Skill dilution: If you rely entirely on vibe, your debugging muscles won’t grow. A healthy balance helps—treat the AI as a collaborator, not a crutch.

Practical safeguards

- Ask for clarity: “Use semantic HTML,” “limit external dependencies,” “explain unusual code choices in comments.”

- Keep it small: Favor lean components and minimal libraries.

- Inspect once: Glance through the output, especially forms, scripts, and any place user input is handled.

- Version control: Save each iteration, so you can roll back if a regeneration goes sideways.
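To make “any place user input is handled” concrete, the classic problem to look for is user text pasted straight into HTML. That lets someone slip in a script tag, which is known as cross-site scripting. Below is the difference in Python using the standard library’s html.escape. The function names are made up for the example. Generated JavaScript has the same issue: setting textContent instead of innerHTML plays the role of escaping.

```python
import html


def render_comment_unsafe(name: str, text: str) -> str:
    # Pastes user input straight into the page: a comment containing
    # <script> tags would run in every visitor's browser.
    return f"<p><strong>{name}</strong>: {text}</p>"


def render_comment(name: str, text: str) -> str:
    # html.escape converts <, >, & and quotes into entities, so the browser
    # displays the text instead of interpreting it as markup.
    return f"<p><strong>{html.escape(name)}</strong>: {html.escape(text)}</p>"


payload = "<script>alert('hi')</script>"
print(render_comment_unsafe("guest", payload))
print(render_comment("guest", payload))
```

If a generated form or comment box builds HTML the first way, that is the spot to ask the AI to fix.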

No‑code vs vibe coding

No‑code platforms give you templates and drag‑and‑drop building blocks. Vibe coding generates original scaffolds from your description. The difference feels like choosing from a menu versus asking a chef to make something that matches your taste. Both are useful; vibe coding just offers more room to invent.

Where this could go next

- Personal memoir sites: “Scrapbook style, Polaroid frames, large pull quotes, gentle page turns.”

- Art portfolios: “Gallery feel, spotlighted images, keyboard navigation, minimal chrome.”

- Micro‑business pages: “Simple services page, friendly pricing cards, contact form, warm colors, accessible and fast.”

- Playful experiments: “Underwater jazz‑club website”—because creativity grows when you let yourself play.

The bigger picture

Vibe coding doesn’t replace engineering; it widens the on‑ramp. Professionals will still refine, optimize, and secure systems. But more people will finally ship the projects they’ve imagined for years. That’s a cultural shift: from “I wish I could build that” to “I can try this today.”

If the internet’s first phase rewarded precision, the next phase might reward intention. When you can tell a computer how something should feel, you free up energy for ideas—and that’s where the fun begins.

by Patrix | Aug 11, 2025

On August 7, 2025, OpenAI officially unveiled GPT-5, its flagship successor to GPT-4, GPT-4.5, GPT-4o, and the o-series models. What makes it exciting isn’t just raw power. It’s the model’s polished intelligence, its uncanny adaptability, and the sense that it wants to collaborate, not just respond.

Why GPT-5 Is a Game Changer: Smarter, Smoother, More Attuned

GPT-5 isn’t merely bigger; it’s wiser and more agile. OpenAI built in a real-time router that automatically chooses between a fast answer and deeper reasoning depending on the request, so users don’t have to pick a model themselves. Across coding, math, writing, health advice, and visual perception, GPT-5 delivers state-of-the-art performance, which OpenAI terms “expert-level responses.”
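OpenAI hasn’t published how its router decides, so the snippet below is only a toy illustration of the idea. A function looks at each request and sends it either to a fast path or to a deep-reasoning path. The cue words, the 200-word threshold, and the route names are all made up for this sketch. The real router is a trained system, not a keyword list.

```python
# Toy router: hypothetical cue words and threshold, NOT OpenAI's actual logic.
DEEP_CUES = ("prove", "step by step", "debug", "analyze", "think hard")
LONG_PROMPT_WORDS = 200  # hypothetical cutoff for "this looks like a big job"


def route(prompt: str) -> str:
    """Return 'deep-reasoning' for prompts that look hard, else 'fast'."""
    text = prompt.lower()
    if any(cue in text for cue in DEEP_CUES):
        return "deep-reasoning"
    if len(text.split()) > LONG_PROMPT_WORDS:
        return "deep-reasoning"
    return "fast"


for p in ("What's the capital of France?",
          "Think hard about this: debug my recursive parser step by step."):
    print(route(p), "<-", p)
```

The point is that the choice happens automatically for every request, which is what spares users the model picker.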

Hallucinations? Sharply reduced. Instructions? Followed more precisely. Sycophancy? Dialed way back. GPT-5 aims to “refuse unsafe queries gently while providing more helpful answers when possible”. It’s faster and more accurate across everyday tasks like writing, coding, and health conversations.

New Capabilities That Blend Power with UX Ease

GPT-5 redefines what users expect from AI:

- Unified access across free, Plus, Pro, enterprise, and educational users—no more confusing model picker menus.

- Personality options—choose tones like “Cynic,” “Listener,” or “Nerd”—and UI themes, plus voice enhancements.

- Long context: in the API, it accepts up to 272,000 input tokens and can return up to 128,000 output tokens, which helps it stay coherent across epic conversations.

- Safety-first design through over 5,000 hours of testing, improved uncertainty handling, and safe completion logic.

- Multimodal prowess: text and image inputs (some reports also mention audio and video support) make GPT-5 more versatile than ever.
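To get a feel for the context-window numbers, here is a small budget check using the API limits quoted above. Together they add up to 400,000 tokens. The check relies on the common rule of thumb that one token is roughly four characters of English text. That heuristic and the helper names are assumptions for illustration. Real counts come from the model’s tokenizer and vary by language and content.

```python
import math

# Limits as quoted in the post; check the current API docs before relying on them.
MAX_INPUT_TOKENS = 272_000
MAX_OUTPUT_TOKENS = 128_000


def rough_token_count(text: str) -> int:
    """Very rough estimate: about 4 characters of English per token."""
    return math.ceil(len(text) / 4)


def fits_budget(prompt: str, max_output_tokens: int) -> bool:
    """True if the prompt and the requested reply both stay under the quoted limits."""
    return (rough_token_count(prompt) <= MAX_INPUT_TOKENS
            and 0 < max_output_tokens <= MAX_OUTPUT_TOKENS)


manuscript = "All happy families are alike. " * 30_000  # about 900,000 characters
print(rough_token_count(manuscript), fits_budget(manuscript, 4_000))
```

By this estimate, a manuscript of roughly 900,000 characters still fits in a single request.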

For Developers: A Toolkit of Precision and Power

Coders, rejoice. GPT-5 is designed to be a true coding partner:

- Top-tier scores on SWE-bench Verified (74.9%) and Aider polyglot (88%) benchmarks.

- Sharper tool calling—accuracy, error handling, preambles between calls, and seamless chaining in complex tasks.

- New API controls: verbosity (low/medium/high) and reasoning_effort (including a nifty “minimal” fast mode) for customizable trade-offs.

- Three API models (GPT-5, GPT-5-mini, and GPT-5-nano) offer flexible trade-offs between price and performance.

- Impressive benchmarks on τ²-bench telecom (96.7% tool-driven accuracy) and long-context retrieval tasks (89% accuracy on inputs of 128K–256K tokens).
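Here is roughly how the two new knobs might look in a request. This sketch only builds the JSON body for OpenAI’s Chat Completions endpoint and never sends it. It assumes reasoning_effort and verbosity are accepted as top-level fields there. The Responses API nests them differently, so confirm the field placement against the current API reference. The build_request helper is hypothetical.

```python
import json

# Values mentioned in the post; "minimal" is the fast mode.
REASONING_EFFORTS = {"minimal", "low", "medium", "high"}
VERBOSITY_LEVELS = {"low", "medium", "high"}


def build_request(prompt: str, model: str = "gpt-5",
                  effort: str = "minimal", verbosity: str = "low") -> dict:
    """Assemble (but do not send) a Chat Completions request body."""
    if effort not in REASONING_EFFORTS:
        raise ValueError(f"unsupported reasoning_effort: {effort!r}")
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unsupported verbosity: {verbosity!r}")
    return {
        "model": model,  # e.g. "gpt-5", "gpt-5-mini", or "gpt-5-nano"
        "messages": [{"role": "user", "content": prompt}],
        "reasoning_effort": effort,  # how much the model thinks before answering
        "verbosity": verbosity,      # how long the final answer should be
    }


# Quick, terse answer from the smallest model:
print(json.dumps(build_request("Suggest a better name for this variable.",
                               model="gpt-5-nano"), indent=2))
```

Raising effort to "high" and verbosity to "high" trades speed and cost for depth and detail. The defaults here lean toward quick, cheap replies.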

Real-World Rollouts & Partnerships

This isn’t theory: GPT-5 is already live. It replaced past models like GPT-4o and GPT-4.5 across ChatGPT. Microsoft is embedding GPT-5 across Copilot and its services, and reports say Apple will integrate it into Apple Intelligence features.

A Word on Costs & Environmental Footprint

Capability costs energy. By some independent estimates, GPT-5 uses around 18 watt-hours for a medium-length reply, which is more than GPT-4o, though that’s expected for a model this capable. As AI scales, energy consumption and carbon footprints remain real concerns, and GPT-5 offers no free pass.
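To put the 18 Wh per reply figure in everyday terms, here is the arithmetic in a few lines of Python. The figure comes from outside researchers’ estimates rather than from OpenAI. The 10 W LED bulb used for comparison is an assumption chosen as a familiar reference point.

```python
WH_PER_REPLY = 18.0  # outside estimate quoted in the post, not an official figure


def energy_kwh(replies: int, wh_per_reply: float = WH_PER_REPLY) -> float:
    """Total energy for a number of replies, in kilowatt-hours."""
    return replies * wh_per_reply / 1000


def led_bulb_hours(wh: float, bulb_watts: float = 10.0) -> float:
    """How long a bulb of the given wattage could run on that much energy."""
    return wh / bulb_watts


print(led_bulb_hours(WH_PER_REPLY))  # one reply vs. a 10 W LED bulb, in hours
print(energy_kwh(1_000))             # a thousand replies, in kWh
print(energy_kwh(1_000_000) / 1000)  # a million replies, in MWh
```

One reply works out to roughly an hour and three-quarters of LED light. At the scale of millions of replies, the total becomes megawatt-hours.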

Early Reception: Cheers and Caution Flags

Many users and creators celebrate GPT-5’s polish and power. Publications like Wired and Tom’s Guide applaud its performance, personalization, and integration with Gmail/Calendar.

But not all the feedback is glowing. Some users found GPT-5’s responses “flat” compared to GPT-4o, and early routing glitches caused inconsistent answers. OpenAI has since fixed the router, pledged to restore GPT-4o access for Plus users, and promised to expand personalization options.

MIT Technology Review called GPT-5 “a refined product more than a transformative leap”—not revolutionary, but polished and purpose-driven. Which, for creative and independent thinkers, may well be exactly what the moment demands.

What GPT-5 Means for the Future of AI

GPT-5 signals a shift from novelty to nuance. It’s not just about raw intelligence—it’s about steerability, accessibility, context-awareness, and integration. We’re seeing:

- AI that adapts to you, not generic prompts.

- Agents embedded seamlessly in workflows—email, code, projects, calendars—with long memory.

- Developer tools that let builders refine speed, depth, cost, and tool orchestration.

- A model that knows when to think fast and when to dig deep, intuitively and elegantly.

In short, GPT-5 isn’t a toy—it’s a collaborator. We’re inching closer to Artificial General Assistance: AI that generates, *reasons*, and *works with* us—smart, safe, and stylish.


by Patrix | Aug 8, 2025

Open-weight AI models have arrived! OpenAI just released something that feels a bit like a plot twist: its first open-weight model family in over five years. It’s called gpt-oss. If you’ve been waiting for a powerful, transparent, and commercially usable large language model, this one might just be your new favorite toy.

So what is it, why does it matter, and how can you actually use it?

What is gpt-oss?

At the heart of the release is gpt-oss-120b, a 117-billion-parameter model that uses a Mixture-of-Experts (MoE) architecture. Instead of running every parameter for every token (as dense models do), it activates only 4 of the 128 “experts” in each layer for each token. Think of it like having a panel of 128 specialists and calling on the 4 most relevant ones for each word.
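Below is a toy version of that routing step in plain Python. A router scores all 128 experts for the current token, only the top 4 run, and their outputs are blended with softmax weights computed over just those 4. The random router scores and the “experts” (simple multipliers) are stand-ins. In the real model, the scores come from a learned layer applied to the token’s hidden state, and each expert is a feed-forward network. Renormalizing over the selected experts is one common design, assumed here.

```python
import math
import random


def top_k_route(logits: list[float], k: int = 4) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax over just those."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_layer(x: float, logits: list[float], experts, k: int = 4) -> float:
    """Weighted sum of the selected experts' outputs; the other experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(logits, k))


NUM_EXPERTS = 128
# Toy experts: expert s just multiplies its input by s.
experts = [lambda x, s=s: s * x for s in range(NUM_EXPERTS)]

random.seed(0)
logits = [random.gauss(0, 1) for _ in range(NUM_EXPERTS)]  # stand-in router scores
print(top_k_route(logits))
print(moe_layer(1.0, logits, experts))
```

The per-token work scales with the 4 experts that run, not the 128 that exist. That is where the MoE efficiency comes from.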

That makes gpt-oss-120b both powerful and efficient. OpenAI reports near-parity with its o4-mini model on core reasoning benchmarks, yet gpt-oss-120b can run inference on a single 80 GB GPU. That’s a big deal.
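Whether the model fits on one 80 GB card comes down to arithmetic on the weights. The sketch below assumes, for simplicity, that every parameter is stored at the same precision. In the actual release, OpenAI quantized the MoE weights to the MXFP4 format while other parts stay at higher precision. The KV cache and activations also need room, so the real footprint is somewhat above the lower figure printed here.

```python
def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return params * bits_per_param / 8 / 1e9


TOTAL_PARAMS = 117e9  # gpt-oss-120b, per the post
GPU_GB = 80

# MXFP4 stores 4-bit values plus one shared 8-bit scale per block of 32,
# i.e. 4 + 8/32 = 4.25 bits per weight on average.
for label, bits in (("bf16", 16), ("MXFP4", 4.25)):
    gb = weight_memory_gb(TOTAL_PARAMS, bits)
    verdict = "fits" if gb < GPU_GB else "does not fit"
    print(f"{label:>5}: {gb:6.1f} GB of weights, {verdict} in {GPU_GB} GB")
```

At 16 bits per weight, the model would need about 234 GB. At roughly 4 bits, the weights drop to about 62 GB, which leaves headroom on an 80 GB GPU.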

There’s also a smaller sibling, gpt-oss-20b, with around 21 billion parameters and designed to run on desktop GPUs with just 16 GB of VRAM. This one’s a great fit for local deployments and smaller custom AI tools.

Why This Release Matters

Here’s the kicker: OpenAI hasn’t released open weights since GPT-2 in 2019. That means for more than half a decade, it kept its most powerful models tightly guarded. That was understandable, but frustrating for developers and researchers who wanted to tinker, fine-tune, and self-host.

gpt-oss changes that.

It’s released under the Apache 2.0 license, which is remarkably permissive. You can use it commercially, modify it, retrain it, and even ship it as part of a product—without OpenAI looking over your shoulder.

This opens the door for startups, indie developers, educators, and researchers to build powerful, privacy-respecting tools with serious reasoning capabilities.

Transparent Reasoning, Tested Safety

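One of the more impressive features is visible chain-of-thought (CoT) reasoning. The model exposes its full reasoning, so you can see how it “shows its work” as it solves problems, thinks through logic, or reasons its way to an answer. That’s a plus for applications in education, healthcare, law, and anywhere else where AI transparency is non-negotiable. There is one caveat: OpenAI cautions that the raw chain of thought can contain hallucinated or harmful content. It is therefore meant for developers and monitoring rather than for showing directly to end users.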

OpenAI also ran the model through its Preparedness Framework, testing for biosecurity and cybersecurity misuse risks, including after adversarial fine-tuning. Under those conditions, the model did not reach the framework’s “High” capability level. So it’s not just powerful; it’s responsibly released.

Performance in the Wild

OpenAI says gpt-oss-120b holds its own on serious benchmarks:

- Competitive programming (Codeforces)

- Math challenges (AIME)

- Medical reasoning (HealthBench)

- Tool-using workflows like Python scripting and web searches

And because its expert weights are quantized to about 4 bits, it can do all this on a single 80 GB GPU. You could run it on-prem, or spin it up in the cloud using platforms like Azure, AWS, Hugging Face, or Databricks. Several of these partners are already deploying the model natively.

Why Developers Should Care

This isn’t just a research toy—it’s a production-ready LLM. A few reasons you might want to play with it:

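- Efficiency at scale: only about 5.1B of the 117B parameters are active for each token. Per-token compute is therefore closer to that of a much smaller dense model, although all the weights still have to fit in memory.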

- Customizable reasoning depth: You can select low, medium, or high-effort chain-of-thought modes to balance accuracy vs latency.

- Truly open weights: Download it. Fine-tune it. Ship it.

- Cloud and local options: From a workstation to a cluster, you’ve got deployment flexibility.
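On the reasoning-depth point, OpenAI’s gpt-oss documentation describes choosing the level through the system prompt, with a line such as “Reasoning: high”. The helper below just builds a chat-style message list in that shape. The function name is hypothetical. You would pass the result to whatever runtime serves the model, for example a chat template in Hugging Face transformers. Confirm the exact prompt format against the model card for your runtime.

```python
REASONING_LEVELS = ("low", "medium", "high")


def build_messages(question: str, level: str = "medium") -> list[dict]:
    """Chat messages that ask gpt-oss for a given reasoning depth."""
    if level not in REASONING_LEVELS:
        raise ValueError(f"level must be one of {REASONING_LEVELS}, got {level!r}")
    return [
        # Low = faster, shorter chain of thought; high = slower, more thorough.
        {"role": "system", "content": f"Reasoning: {level}"},
        {"role": "user", "content": question},
    ]


print(build_messages("Is 2**61 - 1 prime?", level="high"))
```

For a chatty assistant, start at low and reserve high for math, code review, or other questions where a wrong answer costs more than a slow one.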

The Specs (in Plain English)

| Feature | gpt-oss-120b |
|---|---|
| Total Parameters | ~117 Billion |
| Transformer Layers | 36 |
| Experts per Layer | 128 |
| Active Experts per Token | 4 |
| Architecture | Sparse Mixture-of-Experts (MoE) |
| GPU Requirement | Single 80 GB GPU |
| License | Apache 2.0 (open for commercial use) |
| Chain-of-Thought Output | Transparent and configurable |
| Safety Testing | Passed adversarial misuse evaluations |

A New Chapter for OpenAI?

OpenAI’s release of gpt-oss as open weights might be one of the more quietly transformative moves in the AI ecosystem this year. It brings OpenAI into closer philosophical alignment with the open-source movement, while still maintaining some safeguards around training data privacy.

Whether you’re building a personal coding assistant, a healthcare chatbot, or an internal enterprise tool, gpt-oss gives you a transparent, fast, and smart foundation to build on.

And this time, it’s yours to keep.

Want to explore what gpt-oss can do? Stay tuned—we’ll be publishing fine-tuning guides, use case experiments, and performance comparisons right here on ArtsyGeeky.

by Patrix | Aug 4, 2025

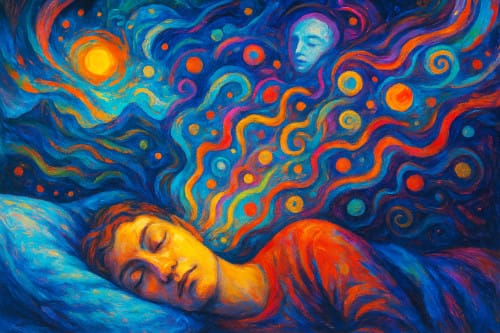

Ever had that dreamy moment when you’re just starting to doze off, and suddenly your mind floods with strange images, sounds, or ideas that seem to come from nowhere? Maybe it felt like falling, or maybe you heard your name called out from the void—only to realize you’re still half-awake. Welcome to the weird and wonderful world of hypnagogia, the twilight state between wakefulness and sleep.

It’s a mental borderland where creativity blossoms, logic loosens, and the subconscious starts stretching its legs. Artists, inventors, and philosophers have long dipped into this semi-dream state for inspiration. And now, in a world driven by sleep science, cognitive hacking, and AI, hypnagogia is making a comeback.

What Is Hypnagogia?

Hypnagogia (pronounced hip-nuh-GO-jee-uh) refers to the transitional state your brain enters as you fall asleep. It’s the soft descent from conscious awareness to unconscious dreaming. Unlike REM sleep (when dreams get cinematic), hypnagogia tends to be more fragmentary, fleeting, and surreal—like the mind whispering to itself just before the lights go out.

Neuroscientifically, it’s marked by changes in brainwave activity: your alert beta waves start to give way to slower alpha and theta waves. You’re no longer fully awake, but not quite asleep either.
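To put rough numbers on that shift, here is a tiny lookup using commonly cited EEG frequency bands. The band edges are conventions that vary a little from source to source (alpha is sometimes given as 8 to 12 Hz, for example), so treat them as approximate.

```python
# Commonly cited EEG bands in hertz (approximate; sources differ slightly).
BANDS = (
    ("delta", 0.5, 4.0),
    ("theta", 4.0, 8.0),
    ("alpha", 8.0, 13.0),
    ("beta", 13.0, 30.0),
    ("gamma", 30.0, 100.0),
)


def band_of(freq_hz: float) -> str:
    """Name the band that a dominant frequency falls into."""
    for name, low, high in BANDS:
        if low <= freq_hz < high:
            return name
    return "outside the usual bands"


# Illustrative drift from alert to drowsy (made-up values, not real EEG data).
for hz in (20, 10, 6):
    print(f"{hz} Hz -> {band_of(hz)}")
```

The hypnagogic slide runs down this list: from beta, through alpha, into theta, before deeper sleep brings delta.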

This state is often rich in:

- Visual hallucinations: Shapes, colors, faces, landscapes

- Auditory distortions: Echoes, single words, music, or whispers

- Physical sensations: The infamous “falling” feeling or sleep starts (called hypnic jerks)

- Creative thoughts: Sudden insights or strange mental associations

In other words, hypnagogia is a temporary suspension of the rules—your usual mental filters go offline just long enough for your inner world to get weird.

History’s Most Famous Half-Asleep Thinkers

Many brilliant minds throughout history have tapped into the hypnagogic state as a wellspring of creative insight.

- Salvador Dalí used what he called “slumber with a key”: he’d nap in a chair while holding a metal key over a plate. As he drifted off and dropped the key, the clatter would wake him—allowing him to grab whatever surreal images floated through his mind.

- Thomas Edison reportedly used a similar technique with ball bearings, a chair, and a tin plate. He believed this liminal state was the gateway to his best ideas.

- Mary Shelley, author of Frankenstein, described seeing her famous monster in a waking dream—not quite asleep, not quite awake.

- August Kekulé, a 19th-century chemist, had a hypnagogic vision of a snake biting its own tail—which led him to realize the ring structure of the benzene molecule.

What Happens in the Brain During Hypnagogia?

Some brain imaging studies suggest that during hypnagogia, activity in the default mode network (DMN) ramps up. This part of the brain is associated with daydreaming, self-reflection, and internal narrative. At the same time, areas responsible for sensory processing remain semi-active, which may explain why hypnagogic visions and sounds feel so vivid.

It’s also a time when executive function—the brain’s taskmaster—lets down its guard. That’s why your thoughts might jump from an old memory to a strange image to a new idea, all in a matter of seconds. It’s nonlinear, associative thinking at its finest.

Can You Use Hypnagogia for Creativity?

Yes—and people are doing it.

Some creatives actively try to induce hypnagogia through intentional napping, meditation, or lucid dreaming techniques. Here are a few practical ways to experiment:

1. Hypnagogic Journaling

Lie down with a journal nearby. As you begin to doze, try to stay aware of the images or phrases that come to mind. The moment you jerk awake or stir, jot down anything you remember—no matter how odd.

2. The Dalí Method (with a modern twist)

Try holding a small object—like a spoon or coin—in your hand while resting in a chair. Place a metal or ceramic plate underneath. As you drift off and drop the object, the sound will wake you. Capture whatever you saw or thought about.

3. Audio Triggers

Some people use soft ambient music, binaural beats, or even AI-generated soundscapes to help ease into the hypnagogic zone. Apps like Endel, Brain.fm, or YouTube’s sleep music loops can help coax the brain into theta territory.
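Binaural beats work by playing two slightly different tones, one in each ear. The brain perceives a slow pulse at the difference between them. So 200 Hz on the left and 206 Hz on the right gives a 6 Hz beat, inside the theta range. The sketch below writes such a track with Python’s standard library. The frequencies, volume, and function names are illustrative choices. The evidence that these beats actually steer brainwaves is mixed, so think of them as a relaxation aid to experiment with rather than a guaranteed switch. Use headphones: the effect depends on each ear hearing only its own tone.

```python
import math
import struct
import wave


def beat_frequency(left_hz: float, right_hz: float) -> float:
    """The pulse the listener perceives: the difference between the two tones."""
    return abs(right_hz - left_hz)


def binaural_frames(base_hz: float = 200.0, beat_hz: float = 6.0,
                    seconds: float = 10.0, rate: int = 44_100,
                    volume: float = 0.3) -> bytes:
    """16-bit stereo PCM: base_hz in the left ear, base_hz + beat_hz in the right."""
    amp = int(32767 * volume)
    frames = bytearray()
    for i in range(int(seconds * rate)):
        t = i / rate
        left = int(amp * math.sin(2 * math.pi * base_hz * t))
        right = int(amp * math.sin(2 * math.pi * (base_hz + beat_hz) * t))
        frames += struct.pack("<hh", left, right)  # little-endian L/R sample pair
    return bytes(frames)


def write_wav(path: str, frames: bytes, rate: int = 44_100) -> None:
    """Save 16-bit stereo frames as a .wav file."""
    with wave.open(path, "wb") as w:
        w.setnchannels(2)
        w.setsampwidth(2)  # bytes per sample, i.e. 16-bit audio
        w.setframerate(rate)
        w.writeframes(frames)
```

For a one-minute clip you can loop in any player, call write_wav("theta_6hz.wav", binaural_frames(seconds=60)). Pure Python takes a few seconds to generate it.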

Why Hypnagogia Matters in an Age of Hyper-Productivity

In a culture obsessed with productivity hacks, attention spans, and the “optimization” of every waking moment, hypnagogia is a gentle rebellion. It’s a reminder that creativity doesn’t always emerge from grinding harder—it can come from surrender, softness, and liminal mental space.

AI is increasingly being trained to simulate aspects of human creativity, but the irrational, fluid, dreamlike logic of states like hypnagogia remains uniquely human, for now. In fact, some researchers are studying hypnagogic imagery as a model for how future AI might mimic human associative thinking.

At the same time, scientists are looking into the potential therapeutic benefits of this state. Some early work suggests it might help in processing trauma, enhancing memory, or even supporting problem-solving during sleep onset.

Next time you’re nodding off and a bizarre image flashes across your mind—don’t shrug it off. That may just be your subconscious offering up a sliver of wisdom wrapped in weirdness.

Hypnagogia is a liminal zone, a soft corridor between two worlds. You don’t have to sleep through it. You can explore it, learn from it, and maybe even create something wonderful while dancing on the edge of dreaming.

by Patrix | Aug 1, 2025

In the rapidly accelerating world of artificial intelligence, a new kind of global competition is unfolding—one often termed the “AI race.” This isn’t merely about developing the smartest algorithms; it’s a strategic imperative with profound economic and geopolitical implications. At its core, this race highlights the critical role of AI infrastructure, particularly high-performance computing assets like GPUs, as a foundational national and economic asset, akin to traditional resources like energy or food.

Compute Sovereignty: The Strategic Imperative of AI Infrastructure

One of the most compelling concepts emerging from this global competition is “compute sovereignty.” This refers to the idea that a nation’s ability to access and control its own AI compute infrastructure—including advanced GPUs, robust data centers, and the sophisticated networks that connect them—is becoming a non-negotiable aspect of national security and economic independence. Just as energy security or food security has historically been paramount, control over AI compute is now seen as essential for any nation aiming to maintain its competitive edge and self-determination in the 21st century.

This perspective underscores a fundamental shift: AI is not just another technological advancement; it is the foundational layer for a new industrial revolution. Its transformative power will reshape every sector, from healthcare and finance to manufacturing and defense. Consequently, significant, sustained investment in this infrastructure is not an option but a necessity. Nations that secure their compute capabilities will be better positioned to foster innovation, attract talent, and dictate the terms of their digital future.

The Full AI Stack: From Chips to Models

Success in the global AI race demands innovation across the entire AI stack. This encompasses everything from the very building blocks of AI—advanced chip design and systems architecture—to the development of sophisticated large language models (LLMs) and their applications. Companies like NVIDIA exemplify excellence in the hardware layer, pushing the boundaries of GPU technology that powers the most complex AI computations. Their advancements enable the rapid training and deployment of ever-larger and more capable AI models.

Complementing this hardware prowess is the burgeoning field of model development. European firms like Mistral AI are demonstrating leadership in this space, focusing on creating cutting-edge large language models. The interplay between these two levels is what truly drives the exponential progress we are witnessing in AI: hardware innovation provides the necessary compute power, and model development crafts the intelligent systems. Without robust infrastructure, even the most ingenious models cannot be realized; without innovative models, even the most powerful hardware remains an untapped resource.

The Democratizing Force of Open-Source AI

A crucial element influencing the trajectory of AI development is the rise and significance of open-source AI models. These models, freely available and modifiable by anyone, play a pivotal role in democratizing access to AI technologies. By fostering broader participation, open-source initiatives accelerate innovation significantly. They allow a multitude of developers, researchers, and companies—regardless of their size or resources—to build upon existing models, experiment with new ideas, and contribute to the collective advancement of AI.

Furthermore, open-source AI fosters healthy competition and can empower nations to build sovereign AI capabilities without becoming solely reliant on proprietary models developed by a handful of large tech companies. For regions like Europe, which possess a strong research base and a deep talent pool, leveraging open-source frameworks, as exemplified by Mistral AI’s strategy, presents a viable pathway to establish leadership and ensure technological self-sufficiency in the AI domain.

National Strategies for AI Leadership and Sovereignty

Achieving leadership in AI is not a passive endeavor; it requires deliberate national strategies and proactive industrial policies. Governments play an indispensable role in fostering an environment where AI companies can thrive. This includes direct investment in foundational research, incentives for building out compute infrastructure, and support for robust R&D initiatives. Policies that encourage collaboration between academia and industry are also vital, ensuring that cutting-edge research translates into real-world applications and commercial successes.

Beyond infrastructure and policy, the importance of talent, ecosystem development, and data cannot be overstated. Attracting and retaining top AI talent is a continuous challenge that requires competitive educational systems, research opportunities, and attractive employment prospects. Building a vibrant AI ecosystem involves fostering startups, encouraging venture capital investment, and creating regulatory frameworks that balance innovation with responsible development. Access to diverse and high-quality data is also a critical bottleneck and a strategic asset, as data fuels the training of all advanced AI models.

For regions like Europe, which have a strong legacy in scientific research and a deep pool of technical talent, the challenge lies in translating this potential into commercial leadership. Robust investment in infrastructure and a supportive, forward-looking regulatory environment are key to unlocking Europe’s capacity to compete effectively in the global AI race and to cultivate European champions like Mistral AI.

Adapting to the Relentless Pace of Innovation

The pace of AI development is nothing short of breathtaking. What was considered cutting-edge yesterday may be commonplace tomorrow. This rapid evolution necessitates agility and constant adaptation from both private companies and governmental bodies. Staying competitive requires continuous learning, strategic foresight, and the courage to invest in emerging technologies and paradigms. The economic and geopolitical stakes could not be higher; leadership in AI promises immense advantages in productivity, national security, and global influence, while falling behind carries significant risks.

In essence, the AI race is a marathon with sprints. Nations that recognize AI compute as a strategic sovereign asset, invest across the full AI stack, embrace the democratizing power of open-source, and implement comprehensive national strategies for talent and ecosystem development will be the ones best positioned to harness the full potential of artificial intelligence for their prosperity and security.